
## State of AR/VR & the Computer Graphics and User Interfaces Lab

Steve K. Feiner, Columbia University

**Steven K. Feiner** is a Professor of Computer Science at Columbia University, where he directs the Computer Graphics and User Interfaces Lab. His research interests include human-computer interaction, augmented reality and virtual reality, 3D and 2D user interfaces, automated design of graphics and multimedia, mobile and wearable computing, health applications, computer games, and information visualization.

In detailing the work being conducted in his lab and the technologies underlying extended reality (XR), Feiner provided formal definitions for these technologies:

## What is Virtual Reality (VR)?

VR refers to the idea of a computer-generated world, consisting of virtual media that are 3D, that are interactive, and that are tracked relative to the user.

## What is Augmented Reality (AR)?

AR also refers to the idea of a computer-generated world consisting of virtual media, plus the idea that these virtual media are registered in 3D with the perceptible real world itself.

Headset displays were developed so that we could experience these technologies, and some headset displays are also equipped with audio. AR and VR can address any or all of our senses: not just what we see, but what we hear, feel, and even taste and smell, although the focus is more on what we see than on anything else.

## Background

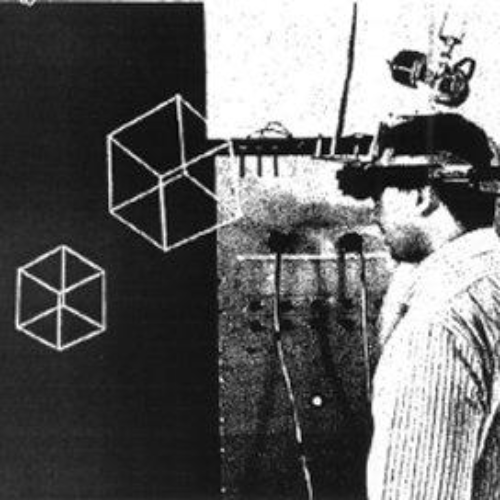

Given the increase in popularity of this technology, it may seem relatively new; however, there are over 50 years’ worth of research on VR and AR. Ivan Sutherland created one of the first head-worn displays and published the work close to 51 years ago. At the Association for Computing Machinery / Institute of Electrical and Electronics Engineers (ACM/IEEE) Computer Society’s Fall Joint Computer Conference, he showcased the headset, which let the wearer see a wireframe cube in 3D.

That was the same conference at which Douglas Engelbart gave what is often called the “Mother of All Demos,” where he introduced people to the idea of a mouse and of a computer being used for text processing, hypertext, and links between parts of a document, in a collaborative multi-site demo with video and audio between the sites.

So at the same conference where people were being introduced to the beginnings of the future, to what we take for granted today when using Google Docs and MS Word, people were also seeing the first fully running AR/VR display.

It is interesting that the technology we think of as standard for interaction today, which Engelbart presented, was developed at the same time as the technology we consider emerging today, AR/VR. This may be partly because AR/VR is, in many ways, harder.

Feiner brings us back to the present and goes over the technologies that are being utilized in his lab:

## Types of AR Displays

Optical see-through displays: A small display is relayed to the wearer’s eye through optics. This can be as simple as a small piece of glass that reflects the display while still letting you see through to the world. With this type of display, your direct view of your actual environment is mixed with virtual media using optics alone.

Video see-through displays: A camera captures the view of the environment, a computer generates virtual graphics and combines them with the camera view, and the processed result is displayed to the user as video. With this type of display, you are not looking directly at your environment.
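The combining step in a video see-through display can be sketched as per-pixel alpha blending. This is only an illustration, not how any particular headset works: the function names, the list-of-rows frame layout, and the use of a single alpha value per virtual pixel are all assumptions made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]                 # RGB camera pixel, each channel 0-255
VirtualPixel = Tuple[int, int, int, float]   # RGB plus alpha in [0, 1] (assumed layout)


def composite_pixel(camera: Pixel, virtual: VirtualPixel) -> Pixel:
    """Blend one virtual pixel over one camera pixel.

    alpha = 0 keeps the camera view, alpha = 1 shows only the virtual graphics.
    """
    r, g, b, alpha = virtual
    return tuple(
        round(alpha * v + (1.0 - alpha) * c)
        for v, c in zip((r, g, b), camera)
    )


def composite_frame(
    camera_frame: List[List[Pixel]],
    virtual_layer: List[List[VirtualPixel]],
) -> List[List[Pixel]]:
    """Blend a whole virtual layer over a camera frame of the same size."""
    return [
        [composite_pixel(c, v) for c, v in zip(cam_row, virt_row)]
        for cam_row, virt_row in zip(camera_frame, virtual_layer)
    ]


# Hypothetical 1x2 frame: the first pixel has no virtual content,
# the second is half-covered by a red virtual object.
camera_frame = [[(100, 100, 100), (100, 100, 100)]]
virtual_layer = [[(0, 0, 0, 0.0), (200, 0, 0, 0.5)]]
blended = composite_frame(camera_frame, virtual_layer)
```

Because the user only ever sees the blended frame, everything in this path (camera capture, rendering, blending, display) sits between the user and the environment, which is the key difference from the optical approach.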

## These headset displays prompt the question, why now?

Well, many companies are currently producing headsets that are far more advanced than what researchers had in their labs not too many years ago. It’s very exciting that these devices can be bought at prices designed for the average consumer. These companies include Oculus and HTC, among others.

## What does this make possible?

This means that we can accurately track the position and orientation of your head in 3D and present stereo graphics based on where you are relative to the virtual and real objects in your environment. We can also present stereo audio relative to where you are, and even spatialize that audio so that it sounds like it’s coming from a place in the environment rather than from between your ears.
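To make the link between head tracking and audio concrete, here is a minimal sketch that turns a tracked head pose and a sound-source position into left and right channel gains using constant-power panning. It is a deliberate simplification: real spatial audio typically relies on head-related transfer functions, and the 2D top-down coordinates, the yaw convention, and the function names below are assumptions made for this example.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # top-down position: x to the right, y forward (assumed)


def relative_azimuth(head_pos: Vec2, head_yaw: float, source_pos: Vec2) -> float:
    """Angle of the source relative to where the head is facing, in radians.

    head_yaw is 0 when facing +y and grows as the head turns to the right.
    The result is in [-pi, pi]; positive means the source is to the right.
    """
    dx = source_pos[0] - head_pos[0]
    dy = source_pos[1] - head_pos[1]
    angle = math.atan2(dx, dy) - head_yaw
    return math.atan2(math.sin(angle), math.cos(angle))  # wrap to [-pi, pi]


def stereo_gains(head_pos: Vec2, head_yaw: float, source_pos: Vec2) -> Tuple[float, float]:
    """Constant-power (left, right) gains for a source given the head pose."""
    pan = math.sin(relative_azimuth(head_pos, head_yaw, source_pos))  # -1 left, +1 right
    theta = (pan + 1.0) * math.pi / 4.0                               # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


# A source one metre to the right of a listener facing forward.
facing_forward = stereo_gains((0.0, 0.0), 0.0, (1.0, 0.0))
# The same source after the listener turns 90 degrees to face it.
facing_source = stereo_gains((0.0, 0.0), math.pi / 2, (1.0, 0.0))
```

With the head facing forward, nearly all the signal goes to the right channel; once the head turns toward the source, the two channels are balanced. That dependence on head pose is what keeps the sound anchored to a place in the environment.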

There are bimanual controllers that can be fully tracked in position and orientation, and Oculus plans to roll out hand tracking soon, allowing the user to interact without holding a controller. Some devices already do this, but they do not function particularly well. Soon that will change, and we will see smooth, uninterrupted hand tracking done well in commercial devices. Many current headsets have eye tracking as well.

Feiner then goes into detail on his research area, where they are looking at navigation for both indoors and outdoors, visualizing information, and assisting workers performing skilled tasks.

The main theme of the lab’s work is creating user interfaces for XR. They begin by trying to answer the following research question: How can AR and VR help people accomplish their goals, individually or collectively, and do that effectively?

In pursuit of this, they have developed interaction techniques and visualization techniques, created sample applications that include these techniques, and even run studies to see how well the techniques work.

To view his research in more detail, see the lab’s [publications page](http://graphics.cs.columbia.edu/publications/).

# Panel: Convergence of AR & AI

Moderator: Stephanie Mehta — Editor-In-Chief of Fast Company

Panelists:

* Steve K. Feiner — Columbia University

* Tobin Asher — Stanford University, Associate Director of Partnerships, VHIL

* Aaron Williams — VP of Global Community at OmniSci

* Omer Shapira — Engineer and Artist at NVIDIA

The theme of this panel is the mashup of data science, artificial intelligence, machine learning and virtual reality, mixed reality and augmented reality.

## Why is it important for people on the AR/VR side to start engaging with their counterparts who specialize in AI and ML?

Tobin discussed how his lab at Stanford studies the psychological and behavioral effects of VR and, more broadly, the societal impacts of virtual and augmented reality.

One of the things that comes up a lot in conversation right now is all the tracking data that’s out there. Every time you’re in a headset, you are tracked many times a second so that you can be positioned in the virtual world. Tobin’s lab has run studies that show how much we can learn about somebody from that tracking data using machine learning.

In one study, a teacher and a student came together in VR, and the teacher had to convey certain information to the student. A post-test questionnaire then measured how well the student had learned. The researchers also collected body-tracking data and used machine learning algorithms to see whether they could predict how well people learned from that data. They were able to predict learning with 85% accuracy. A similar study on predicting creativity reached around 86% accuracy. So there are a lot of implications for using tracking data once you start incorporating machine learning into what we know about people.

And then, of course, there are all the questions about data privacy: which companies own the data, and what happens to it? Think about how much we know about people based simply on their behavior when typing on the internet. What could we determine when we know how a person is physically interacting?

Shapira explained that when you are doing something in VR, whatever it is, whether it’s playing a game or being social in VR, it is not about VR, it is about your senses and the data. So the entire ecosystem is data-oriented.

Therefore, heavy processing of the data and delivery of it to humans in synced ways is the substratum of the experience; you have to start there. Sensory augmentation, whether making people feel like they’re in the same room or making them feel like they’re on the moon, only comes after they’ve been given meaning in the place that they want to be in and the thing that they want to do.

So when artists or builders of business applications want to create experiences, the first questions they have to start with are: Where are we? What can we interact with? What can we collaborate with? All of that is data, so AI is the point to start from when we’re talking about VR. That is somewhat accidental; it didn’t start that way.

## What role can people in the world play to help facilitate conversations and interaction between these two worlds, AR/VR and AI/ML? Is there a role for people to be able to bridge those gaps?

Aaron Williams believes we should start from first principles and aim to understand what we want to do: what do we want to accomplish by using these tools and interfaces?

He’s always looking for the opportunity to take data (fast, ubiquitous, interactive data) and turn it into something that gives you a new perspective on that data and adds to your experience with it.

If all we had were pie charts, and we looked at data only through pie charts, we’d get only a very limited understanding of what that data was. When we start to add all these other ways of looking at and interacting with the data, we start to see a much bigger picture. Now we can see billions of points on a map and interact with those billions of points in real time, which starts to get interesting even on a 2D map.

How do we make that same leap to a VR/AR world, where we’re not just putting 2D data into a 3D space? Instead, we’re starting to think about how to get one plus one to equal three: what additional information does the VR experience give us, and what new context does it add to the experience and to the data?

Take Google Earth’s view of San Francisco, for example. If you look at San Francisco on a 2D map, it looks very flat. But anybody who has lived in or been to San Francisco knows it’s anything but flat. And that context matters. Take data from the city of San Francisco: the telemetry data for its buses gives a really interesting picture of the circulation of vehicles within the city. When we overlay it on a 2D map, we get one perspective on that data; when we see it on Google Earth, we can understand why certain patterns happen because of the topography of San Francisco.

We need to make sure that this conversation comes back to how do we make sure that these interfaces aren’t just used to put frosting on the cake, but instead are used to add context to this data that we have.

## What steps are being created to enhance the product, to enhance the art form, and to enhance the capability?

The biggest problem for anyone working in psychology is the desire to know the user’s wants and needs immediately. This is not just a research concern: there is a data economy, and if you’re on social media you’re a product of that data economy, because a lot of people care about your intent to buy, and intent to buy is, two levels back, psychology.

Businesses now want to know: What is the user looking at? Where do they scroll faster? Does it have something to do with the ad they just saw? Can we use that to make more money off the next app? All of these are components of how the data economy forms, driven by the decisions companies make about what to base their business models on.

## Are there any downsides to being able to take this information and data so quickly?

We’re talking about both the speed of the systems, but also, the speed of progress.

Speed gives you a lot of things. And it’s not even just sort of the interactivity of the data. It also gives you a scale of data. When you have speed, you no longer have to do this sort of downsampling and the kinds of tricks that you’ve used in the past to make data fast. You can keep your entire data set there and look at the entire data set.
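As a small illustration of what downsampling can cost, the sketch below keeps every tenth value of a toy series and compares the result with the full data set. The data is synthetic and the function name is hypothetical; it is only meant to show why keeping the entire data set matters.

```python
from typing import List


def stride_downsample(values: List[float], stride: int) -> List[float]:
    """Keep every `stride`-th value, a common trick for making data 'fast'."""
    return values[::stride]


# Toy data: 1,000 flat readings with a single spike at index 503.
readings = [0.0] * 1000
readings[503] = 100.0

sample = stride_downsample(readings, 10)

full_max = max(readings)    # the spike is visible in the full data set
sample_max = max(sample)    # the spike falls between sampled indices and is lost

print(f"full data: {len(readings)} points, max = {full_max}")
print(f"downsampled: {len(sample)} points, max = {sample_max}")
```

The downsampled series is ten times smaller but no longer contains the one reading that stands out, which is the kind of detail a fast system lets you keep.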

But the most important benefit of speed is curiosity. If it takes you an hour to get an answer back from your data, guess what? You’re not going to ask that data many questions. And you’re going to be careful about the questions you do ask, because the cost of each question is an hour of your time.

Whereas, when that question becomes instantaneous, suddenly you think about those questions differently, and you’re happy to throw trash questions at your data. It’s great, you can suddenly look at the data in whole new ways.

## What happens to the ethical line?

Europe has introduced a regulation called the General Data Protection Regulation (GDPR). It’s a first step for some parts of the world in helping people, and consumers in particular, understand what kinds of data are being collected and how that data is being used, and in limiting what companies can do with that data once they’ve collected it.

Companies are actually incentivized to take user data for granted. One big remedy, which is also trending across companies generally, is to generate as much synthetic data as possible. With synthetic data, instead of viewing someone’s living room, you create someone’s living room in 3D. And because you have a computer, you don’t create just one; you get to create a million of them.

You can also create driving simulators that generate road conditions biased not toward good driving but toward accidents or fire, and train robots on the dangerous real-world scenarios they may put themselves in, whether they operate on their own or are only assisted by humans. Doing so requires tools that mimic light properly and adapt the rendered images to what would come out of the camera you expect to be taking those photos. These are giant domains in machine learning that need to be taken seriously if the data economy is to shift from a permissive model, where users are fair game, to a restrictive model, where user data is used to test against rather than to model after.
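The idea of creating many synthetic living rooms rather than observing a real one can be sketched as randomized scene generation. Everything below is hypothetical: the furniture catalogue, the parameter ranges, and the `SyntheticRoom` fields are placeholders for illustration. A real pipeline would also render each scene in 3D, which this sketch leaves out.

```python
import random
from dataclasses import dataclass
from typing import Iterator, Tuple

# Hypothetical catalogue of objects that may appear in a room.
FURNITURE = ("sofa", "table", "lamp", "bookshelf", "chair")


@dataclass(frozen=True)
class SyntheticRoom:
    width_m: float
    depth_m: float
    light_intensity: float          # 0 = dark, 1 = fully lit (assumed scale)
    furniture: Tuple[str, ...]


def generate_rooms(count: int, seed: int = 0) -> Iterator[SyntheticRoom]:
    """Yield `count` randomized room descriptions, reproducible for a given seed."""
    rng = random.Random(seed)
    for _ in range(count):
        n_items = rng.randint(1, len(FURNITURE))
        yield SyntheticRoom(
            width_m=round(rng.uniform(3.0, 8.0), 2),
            depth_m=round(rng.uniform(3.0, 8.0), 2),
            light_intensity=round(rng.uniform(0.2, 1.0), 2),
            furniture=tuple(rng.sample(FURNITURE, n_items)),
        )


# Generating a thousand rooms is as easy as generating one; the same
# loop scales to a million without involving any real user's home.
rooms = list(generate_rooms(1000, seed=42))
```

Because the generator is seeded, the same set of rooms can be regenerated on demand instead of being stored, and no real user’s living room is ever recorded.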

Columbia University’s Emerging Technologies Colloquium — Extended Realities (XR) and Data Science

The Emerging Technologies Consortium (ETC) is a group of like-minded people who are passionate about sharing information and fostering collaboration in the space of emerging technologies. These technologies include virtual and augmented reality, artificial intelligence, machine learning, robotics, quantum computing, 3D printing, wearables, and more.